Artificial Intelligence is transforming the way businesses operate. From predictive analytics and process automation to generative content creation, AI is becoming a foundational part of modern enterprise solutions. SAP, known for its business-centric software, brings AI capabilities to enterprises through SAP AI Core and SAP AI Launchpad, complemented by a growing suite of generative AI tools.

This blog explores what SAP AI is, the roles of SAP AI Core and SAP AI Launchpad, the foundational concepts behind them, and how SAP's Generative AI capabilities build on that foundation.

What is SAP AI?

SAP AI refers to the suite of technologies and services that enable the development, deployment, and operationalization of AI within the SAP Business Technology Platform (BTP). It is designed to help customers infuse intelligence into their business processes using both classical machine learning and newer generative AI models.

With SAP AI, enterprises can automate tasks, improve decision-making, and build smarter applications. SAP AI supports end-to-end AI lifecycle management, from data preparation to deployment and monitoring.

What is SAP AI Core?

SAP AI Core is the technical backbone for executing AI workloads. It is a runtime environment where developers and data scientists can train, deploy, and manage their custom AI models using familiar tools and open-source frameworks like TensorFlow, PyTorch, or scikit-learn.

Key features of SAP AI Core include:

- Model training and deployment at scale
- Full lifecycle operations: versioning, scheduling, monitoring
- Infrastructure-agnostic execution (can run on AWS, Azure, etc.)
- API-driven architecture

SAP AI Core does not have a graphical UI; it is intended for technical users comfortable working with YAML configurations and DevOps-style workflows.

What is SAP AI Launchpad?

SAP AI Launchpad is the central user interface for managing AI use cases across different runtimes, including SAP AI Core. It acts as a control plane where both technical and business users can interact with models, monitor deployments, manage artifacts, and orchestrate generative AI flows.

Some of its key capabilities include:

- Managing AI scenarios and their lifecycle
- Viewing executions, deployments, and models
- Accessing the Generative AI Hub for prompt-based workflows
- Managing connections to runtimes like SAP AI Core
- Multi-tenant environment with workspace separation

Think of AI Launchpad as your AI project cockpit.

Understanding Core Concepts

If you're new to AI, here’s a simplified explanation of some important terms you’ll encounter while working with SAP AI.

Artifact: Think of this like a file. It can be a dataset, a trained model, or results. These are the items your AI process either uses or creates.

Executable: A ready-to-run template (written in YAML format) that performs a task, like training a model or running a prediction.

Workflow Executable: A set of executables connected like steps in a recipe. It defines an entire AI process or pipeline.

Deployment Template (or Serving Executable): A special type of executable that describes how to turn a trained model into a live service. It tells the system how to serve the model.

Deployment: When you use a deployment template and fill in the details, you get a deployment, a live version of your model with an endpoint you can call to get predictions.

Configuration: Think of it like a form where you specify which model, which data, and which settings to use when running an executable or deployment.

Execution: A single run of an executable, for example, when you're training a model using specific data and settings.

Scenario: A container that groups everything related to a specific AI use case, such as models, configurations, and executables.

Resource Group: Like a project folder in the cloud. It holds all your AI assets for a given team or use case, separate from others.

Embedding: A smart way to turn text (or other data) into numbers that a machine can understand. It helps models grasp the meaning behind the data.

Knowledge Graph: A map of connected facts and concepts that helps AI understand relationships between things, like how "Customer" and "Order" are related.

YAML file: A human-readable configuration file. In SAP AI, YAML is used to define how things like training or deployments should run, what inputs to use, what scripts to call, and what outputs to expect.

Pipeline: A series of connected steps that handle an AI task from start to finish, like getting data, training a model, evaluating it, and deploying it. Each step in a pipeline can be defined as an executable.

These concepts come together to define how models are developed, deployed, and managed within SAP AI systems.
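To make the relationships between these terms concrete, here is a small illustrative model in plain Python. It is not the SAP SDK: every class, field, and function name below is a hypothetical stand-in chosen to mirror the definitions above, and it assumes that a configuration pointing at a workflow executable yields an execution while one pointing at a serving executable yields a deployment.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Executable:
    id: str
    kind: str  # "workflow" (runs a job) or "serving" (exposes a model)


@dataclass
class Scenario:
    # Groups everything that belongs to one AI use case.
    id: str
    executables: Dict[str, Executable] = field(default_factory=dict)


@dataclass
class Configuration:
    # The "form" that says which executable, which data, which settings.
    name: str
    scenario_id: str
    executable_id: str
    parameters: Dict[str, str] = field(default_factory=dict)
    input_artifacts: Dict[str, str] = field(default_factory=dict)


@dataclass
class ResourceGroup:
    # Keeps one team's runs separate from another's.
    id: str
    runs: List[str] = field(default_factory=list)


def start(config: Configuration, scenario: Scenario, group: ResourceGroup) -> str:
    """Turn a configuration into an execution or a deployment."""
    executable = scenario.executables[config.executable_id]
    noun = "execution" if executable.kind == "workflow" else "deployment"
    run = f"{noun}:{config.name}"
    group.runs.append(run)
    return run


scenario = Scenario("churn", {
    "train": Executable("train", "workflow"),
    "serve": Executable("serve", "serving"),
})
team = ResourceGroup("team-a")
train_cfg = Configuration("train-v1", "churn", "train", {"epochs": "5"}, {"data": "artifact-1"})
serve_cfg = Configuration("serve-v1", "churn", "serve")

print(start(train_cfg, scenario, team))
print(start(serve_cfg, scenario, team))
```

The point of the sketch is only the shape: a scenario owns executables, a configuration picks one of them and binds parameters and artifacts, and running that configuration inside a resource group produces either an execution or a deployment.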

What is SAP Generative AI?

SAP Generative AI refers to capabilities provided via the Generative AI Hub, part of SAP AI Launchpad. It enables users to access, experiment with, and manage foundation models such as GPT, Claude, Gemini, and Llama, directly from SAP BTP.

This allows business users to:

- Run natural language prompts against foundation models
- Customize and manage prompts
- Use orchestration to chain together pre/post-processing steps

It supports various foundation model providers like OpenAI, Anthropic, Meta, and Google through services like AWS Bedrock and GCP Vertex AI.

SAP AI Core vs. SAP Generative AI

SAP AI Core and SAP Generative AI serve different but complementary purposes.

- SAP AI Core is focused on classical machine learning. It’s designed for developers who need control over training, data processing, and custom deployments.
- SAP Generative AI (via the Gen AI Hub) simplifies access to powerful language models. It is built for quicker integration into apps using prompts and prebuilt orchestration tools.

| Feature | SAP AI Core | SAP Generative AI |
|---|---|---|
| Purpose | Full ML lifecycle (train, deploy) | Prompt-based workflows with LLMs |
| Users | Developers, ML Engineers | Business & App Developers |
| Interface | YAML, CLI, APIs | GUI via Launchpad |
| Flexibility | Fully customizable pipelines | Prebuilt orchestration modules |
| Model Type | Custom models | Foundation models (GPT, Claude, etc.) |

Working with SAP AI Core

You can perform nearly all SAP AI Core steps through multiple interfaces:

- SAP AI Launchpad UI
- Postman (using the SAP AI Core collection of endpoints)
- SAP AI Core SDK
- AI API Client SDK

This flexibility allows users to choose their preferred tool while achieving the same outcomes. Here's the typical workflow:

- Connecting SAP AI Core and SAP AI Launchpad: Establish the link between your runtime and the management interface.
- Set up Git Repository: Store your code, YAML templates, and related artifacts.
- Create Application Using a YAML File: This file acts as a workflow template and defines your pipeline logic.
- Scenario and Executable Creation: When an application is created, a scenario is automatically generated, containing an executable.
  - Depending on the YAML configuration, the scenario may contain a workflow executable or a serving executable.
  - A workflow executable is a template for running a job (like training), while a serving executable is for deploying it (like inference).
- Create a Configuration for Execution: Select the scenario, version, and workflow executable to configure and run an execution.
- Create a Deployment: Select the scenario, version, serving executable, and configuration to deploy your model or code.
- Schedule Executions: Optionally, create a schedule to automate executions using cron-like expressions.
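If you drive these steps through the API rather than the UI, the configuration, execution or deployment, and schedule steps each boil down to a small JSON body. The sketch below only builds those bodies and sends nothing. The field names and the AI-Resource-Group header are written as I recall them from the AI API and should be checked against the current API reference; the helper names, IDs, and the five-field cron check are assumptions made for illustration.

```python
import json
from typing import Dict, Optional


def ai_api_headers(token: str, resource_group: str) -> Dict[str, str]:
    # Assumed header set: bearer token plus the resource group to act in.
    return {
        "Authorization": f"Bearer {token}",
        "AI-Resource-Group": resource_group,
        "Content-Type": "application/json",
    }


def configuration_body(
    name: str,
    scenario_id: str,
    executable_id: str,
    parameters: Optional[Dict[str, str]] = None,
    artifacts: Optional[Dict[str, str]] = None,
) -> dict:
    # A configuration binds one executable to concrete parameters and artifacts.
    return {
        "name": name,
        "scenarioId": scenario_id,
        "executableId": executable_id,
        "parameterBindings": [
            {"key": k, "value": v} for k, v in (parameters or {}).items()
        ],
        "inputArtifactBindings": [
            {"key": k, "artifactId": v} for k, v in (artifacts or {}).items()
        ],
    }


def run_body(configuration_id: str) -> dict:
    # Assumed to be the same body for starting an execution or a deployment.
    return {"configurationId": configuration_id}


def schedule_body(name: str, configuration_id: str, cron: str) -> dict:
    # Assumption: a classic five-field cron expression.
    if len(cron.split()) != 5:
        raise ValueError("expected a five-field cron expression")
    return {"name": name, "configurationId": configuration_id, "cron": cron}


cfg = configuration_body(
    "train-v1", "my-scenario", "my-training",  # hypothetical IDs
    parameters={"epochs": "5"},
    artifacts={"dataset": "artifact-id-123"},
)
print(json.dumps(cfg, indent=2))
print(json.dumps(schedule_body("nightly-train", "config-id-456", "0 2 * * *")))
```

Keeping body construction separate from the HTTP call makes it easy to reuse the same payloads from Postman, an SDK, or a script.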

Still Not Clear? Here's a Simplified View of What We’re Doing with SAP AI Core

- Write a script: This script can be in Python or another language and performs your desired task.
- Create a YAML file: This is your configuration file. It tells SAP AI Core where your script is, what parameters to pass, and how to run it.
- Create a Scenario: This links everything together using the YAML file.
- Run an Execution: You trigger the execution of your script by creating a configuration and running it.
- Deploy the Script: If needed, you deploy it by creating a deployment that exposes your logic as a service.
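To give a feel for what the YAML file in this flow contains, the sketch below builds a minimal workflow template as a Python dictionary and prints it as JSON, which most YAML parsers also accept. The overall shape (an Argo-style WorkflowTemplate with scenario and executable annotations and labels) is written from memory of SAP's tutorials, so treat the keys as assumptions to verify there. The names, the scenario ID, and the container image are hypothetical placeholders.

```python
import json

workflow_template = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "WorkflowTemplate",
    "metadata": {
        "name": "hello-pipeline",  # hypothetical executable ID
        "annotations": {
            # Human-readable names shown for the scenario and executable.
            "scenarios.ai.sap.com/name": "Hello Scenario",
            "executables.ai.sap.com/name": "Hello Executable",
        },
        "labels": {
            # Ties this template to a scenario and a version.
            "scenarios.ai.sap.com/id": "hello-scenario",
            "ai.sap.com/version": "1.0",
        },
    },
    "spec": {
        "entrypoint": "run-script",
        "templates": [
            {
                "name": "run-script",
                "container": {
                    # Hypothetical image that contains your script.
                    "image": "docker.io/example/hello:0.1",
                    "command": ["python", "/app/src/main.py"],
                },
            }
        ],
    },
}

rendered = json.dumps(workflow_template, indent=2)
print(rendered)
```

The metadata section is what connects the script to a scenario and an executable, while the spec section says which container to start and which command to run inside it.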

This flow makes SAP AI Core highly flexible, scalable, and developer-friendly, while still integrating neatly with enterprise-grade tools like SAP AI Launchpad.

To explore further, you can visit the following official SAP documentation:

If you'd like to try using SAP AI Core yourself, follow this hands-on tutorial:

That was all about SAP AI Core, but how can we utilise existing LLMs for our business processes? For that, we have SAP Generative AI Hub.

Working with SAP Generative AI Hub

Access to generative AI models falls under the global AI scenarios foundation-models and orchestration. These scenarios are managed by SAP AI Core. You can access individual models as executables through serving templates. To use a specific model, you simply choose the corresponding template that matches the foundation model you want to work with.

What is Orchestration?

The orchestration service operates under the global AI scenario orchestration, also managed by SAP AI Core. It enables access to various generative AI models through a unified structure for code, configuration, and deployment.

What makes orchestration powerful is the harmonized API, which lets you switch between foundation models without needing to change your client application code. To use multiple models or different versions, you only need to create one or more orchestration deployments in your default resource group.
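A rough sketch of what the harmonized API means in practice: the request body keeps the same structure and only the model name changes. The body layout below follows an earlier version of the orchestration completion request as I recall it, so the key names are assumptions to check against the current documentation. The helper functions are hypothetical, and the two model names are examples that may not be enabled in your tenant.

```python
import copy
from typing import Dict, List, Optional


def completion_body(
    model_name: str,
    template: List[Dict[str, str]],
    input_params: Dict[str, str],
    model_params: Optional[dict] = None,
) -> dict:
    # Assumed layout: module configurations plus runtime input parameters.
    return {
        "orchestration_config": {
            "module_configurations": {
                "templating_module_config": {"template": template},
                "llm_module_config": {
                    "model_name": model_name,
                    "model_params": model_params or {},
                },
            }
        },
        "input_params": input_params,
    }


def switch_model(body: dict, model_name: str) -> dict:
    # Return a copy that differs from the original only in the model name.
    new_body = copy.deepcopy(body)
    modules = new_body["orchestration_config"]["module_configurations"]
    modules["llm_module_config"]["model_name"] = model_name
    return new_body


template = [{"role": "user", "content": "Summarize this ticket: {{?ticket}}"}]
first = completion_body("gpt-4o", template, {"ticket": "Printer is offline."})
second = switch_model(first, "gemini-1.5-flash")

print(first["orchestration_config"]["module_configurations"]["llm_module_config"])
print(second["orchestration_config"]["module_configurations"]["llm_module_config"])
```

The client code that sends the request and reads the response stays the same for both bodies, which is the practical benefit described above.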

What are Foundation Models?

SAP provides direct access to popular large language models (LLMs) through these global scenarios. These models include options like OpenAI's GPT, Anthropic's Claude, Google's Gemini, Meta's Llama, and others. The integration allows business users and developers to quickly embed generative AI into their applications without managing the complexity of model training or infrastructure.

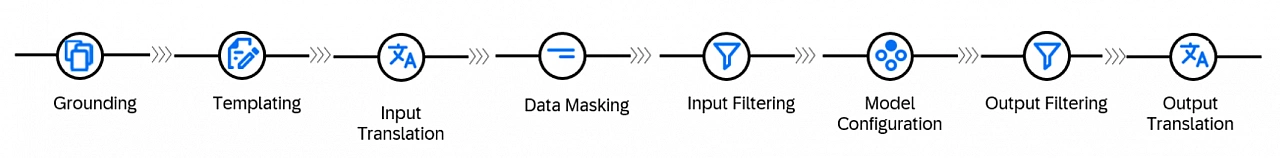

Orchestration Workflow Steps

Before diving into the technical steps, let’s clarify a few concepts like data masking and content filtering, which are vital for privacy and safety when working with Generative AI in SAP.

- Choose a resource group for deploying the orchestration workflow.
- Create a configuration using the global scenario orchestration and the service executable orchestration.
- Create a deployment of that configuration. Once the orchestration deployment is running, you can use the modules below to orchestrate the existing LLMs for your business use case.
- Grounding: Converts documents into vector representations. The indexing pipeline breaks unstructured or semi-structured data into smaller chunks. Each chunk is transformed into embeddings and stored in a vector database, allowing models to search data and return precise, context-aware answers. SAP Gen AI uses grounding to access real-time business data from sources like SAP systems, SharePoint, or internal repositories.
- Prompt templating: Enables you to compose prompts and define placeholders to be filled at runtime.
- Input translation: Allows you to translate LLM text prompts into a chosen target language.
- Data masking: This ensures that sensitive personal information (like names, emails, IDs) is hidden before it’s sent to the model. End users won’t see the original values; they will see masked placeholders like MASKED_ENTITY or MASKED_ENTITY_ID in both the input and model response. For example, if a user prompt includes a person's name, the model will only see MASKED_ENTITY, protecting privacy.
  - Anonymization removes the original data completely; it cannot be restored.
  - Pseudonymization hides data but allows it to be unmasked in the final output if needed.
- Input filtering: An optional module that checks the content before it goes to the model. It protects against unsafe inputs like hate speech, violence, or explicit content. If a prompt violates safety rules, the model will not receive it. Users do not directly interact with the filters, but if input is blocked or altered, they may receive a system message indicating the input was filtered due to safety policies. Filtering is handled by:
  - Azure Content Safety: Classifies text in categories like Hate, Violence, Sexual, and Self-Harm, with severity levels.
  - Llama Guard 3: Detects more nuanced harmful categories such as crimes, privacy risks, self-harm, hate, and election-related content.
  - Multiple filters can run together, but only one of each type can be configured.
- Model configuration: Mandatory module to send the prompt to a foundation model and return a response.
- Output filtering: Similar to input filtering but applied to the model's output.
- Output translation: Allows translation of the model's output into a selected target language.
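The order of these modules is easier to follow in a toy simulation. The sketch below is not the orchestration service: it is a local stand-in that assumes placeholders written as {{?name}}, masks only email addresses with a regular expression, uses a one-word blocklist in place of a real content filter, and calls a stub instead of a foundation model. Grounding and translation are left out, and all function names and the numbered MASKED_ENTITY_n token format are hypothetical.

```python
import re
from typing import Callable, Dict, Tuple

PLACEHOLDER = re.compile(r"\{\{\?(\w+)\}\}")
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
TOKEN = re.compile(r"MASKED_ENTITY_\d+")
BLOCKED_TERMS = {"attack"}  # toy stand-in for a real content filter
FILTER_MESSAGE = "Content was filtered due to safety policies."


def render(template: str, params: Dict[str, str]) -> str:
    """Prompt templating: fill {{?name}} placeholders at runtime."""
    return PLACEHOLDER.sub(lambda m: params[m.group(1)], template)


def mask(text: str) -> Tuple[str, Dict[str, str]]:
    """Data masking: swap each email for a token and remember the original."""
    mapping: Dict[str, str] = {}

    def replace(match: "re.Match[str]") -> str:
        token = f"MASKED_ENTITY_{len(mapping) + 1}"
        mapping[token] = match.group(0)
        return token

    return EMAIL.sub(replace, text), mapping


def unmask(text: str, mapping: Dict[str, str]) -> str:
    """Pseudonymization only: restore originals in the final output."""
    return TOKEN.sub(lambda m: mapping.get(m.group(0), m.group(0)), text)


def is_allowed(text: str) -> bool:
    """Input and output filtering: reject text containing a blocked term."""
    return not any(term in text.lower() for term in BLOCKED_TERMS)


def run_pipeline(
    template: str,
    params: Dict[str, str],
    model: Callable[[str], str],
    pseudonymize: bool = True,
) -> str:
    prompt = render(template, params)
    masked, mapping = mask(prompt)
    if not is_allowed(masked):
        return FILTER_MESSAGE  # the model never receives the prompt
    answer = model(masked)
    if not is_allowed(answer):
        return FILTER_MESSAGE
    # With anonymization the mapping is discarded and tokens stay in place.
    return unmask(answer, mapping) if pseudonymize else answer


def stub_model(prompt: str) -> str:
    return f"Draft: {prompt}"


print(run_pipeline("Reply to {{?sender}}", {"sender": "ana@example.com"}, stub_model))
print(run_pipeline("Reply to {{?sender}}", {"sender": "ana@example.com"}, stub_model, pseudonymize=False))
```

In both calls the stub only ever receives the masked prompt. The difference between pseudonymization and anonymization shows up solely in whether the final output has the original value restored.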

To try this orchestration flow yourself, check out: Consumption of GenAI models Using Orchestration – A Beginner's Guide

To try the foundation model flow yourself, check out: Foundation Model Tutorials

Thank you for taking the time to read this blog. I hope it gave you a clear understanding of how SAP AI Core and SAP Generative AI Hub work and how you can start using them in your projects.

If you prefer a short video summary of this blog, watch this overview on YouTube: SAP Generative AI - Overview Video

To dive deeper into SAP’s AI capabilities and explore tutorials, demos, and real-world use cases, check out the full YouTube playlist: SAP Business AI - YouTube Playlist.

Thanks for reading :)